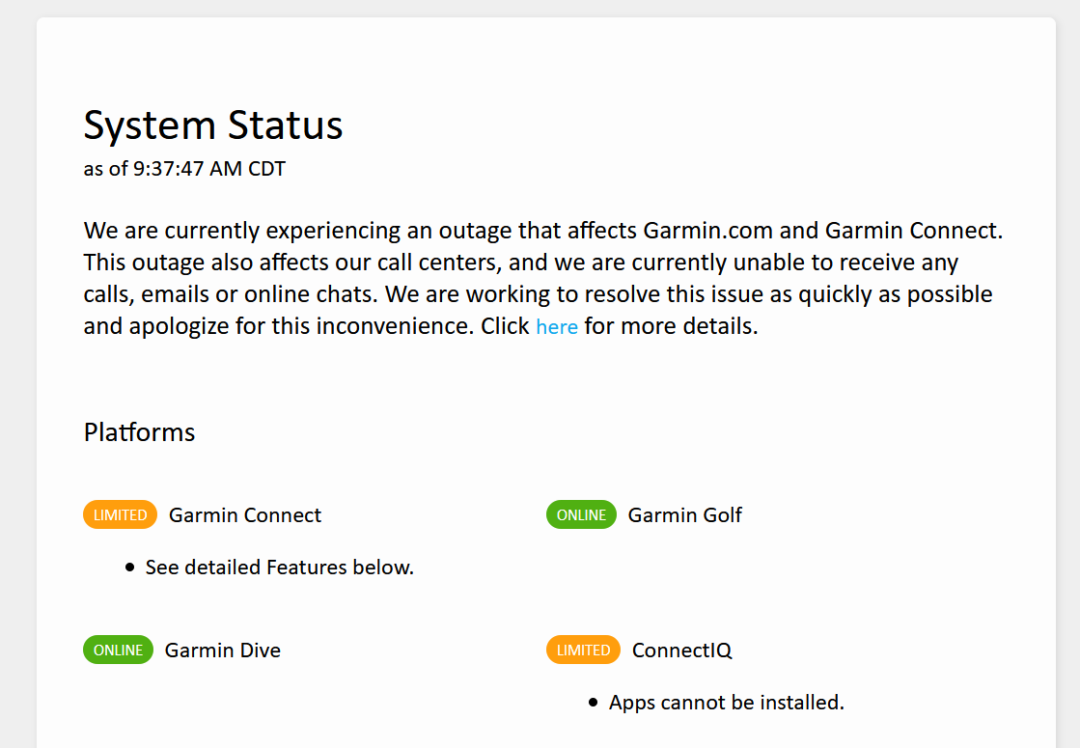

It was a helluva bender, but after four days of hard partying, Garmin is back online. Well, sort of. They still have a massive hangover, or at least that’s what it looks like, because while they’re “online,” many systems are only partially active.

Given that the word on the street is that their “bender” was actually ransomware, it could be a while before everything is fully operational and we learn the scope of the issue. If it was indeed an attack, who knows what was compromised and how they recovered from it.

Of course, the $10,000,000 question is whether they paid (assuming, of course, that it was ransomware), or whether it took them four days to access and execute a DR (disaster recovery) plan. Given the size and age of their organization, I think it’s unlikely that every system was affected, because there is probably a massive amount of diversity in the infrastructure. But it’s also likely that they have quite a few systems that were behind on patching or completely unsupported (W2K3 FTW!), which, if that is the case, is definitely a self-inflicted wound*. Hopefully we do get a transparent telling of what went down, but that’s probably a pipe dream.

* To be 100% clear, the intent here is not to victim blame. If this was a hostile action, those responsible should be held to account. It’s unfortunate that mitigating security issues is a necessary thing, but in reality, it is a thing. It is often overlooked in corporate IT because patching is risky, and migrating off of unsupported infrastructure is difficult and costly. That doesn’t make it any less of a necessity, however. Just as we put locks on our physical doors, good policy around patching, network design, etc. is an important part of securing digital assets from baddies. Nothing we can do will create 100% assurance, but that’s not really the point either. Security, whether physical or electronic, is about raising the bar of inconvenience enough to move the cross-hairs to a different target. As crap as that reality is, it is what it is.